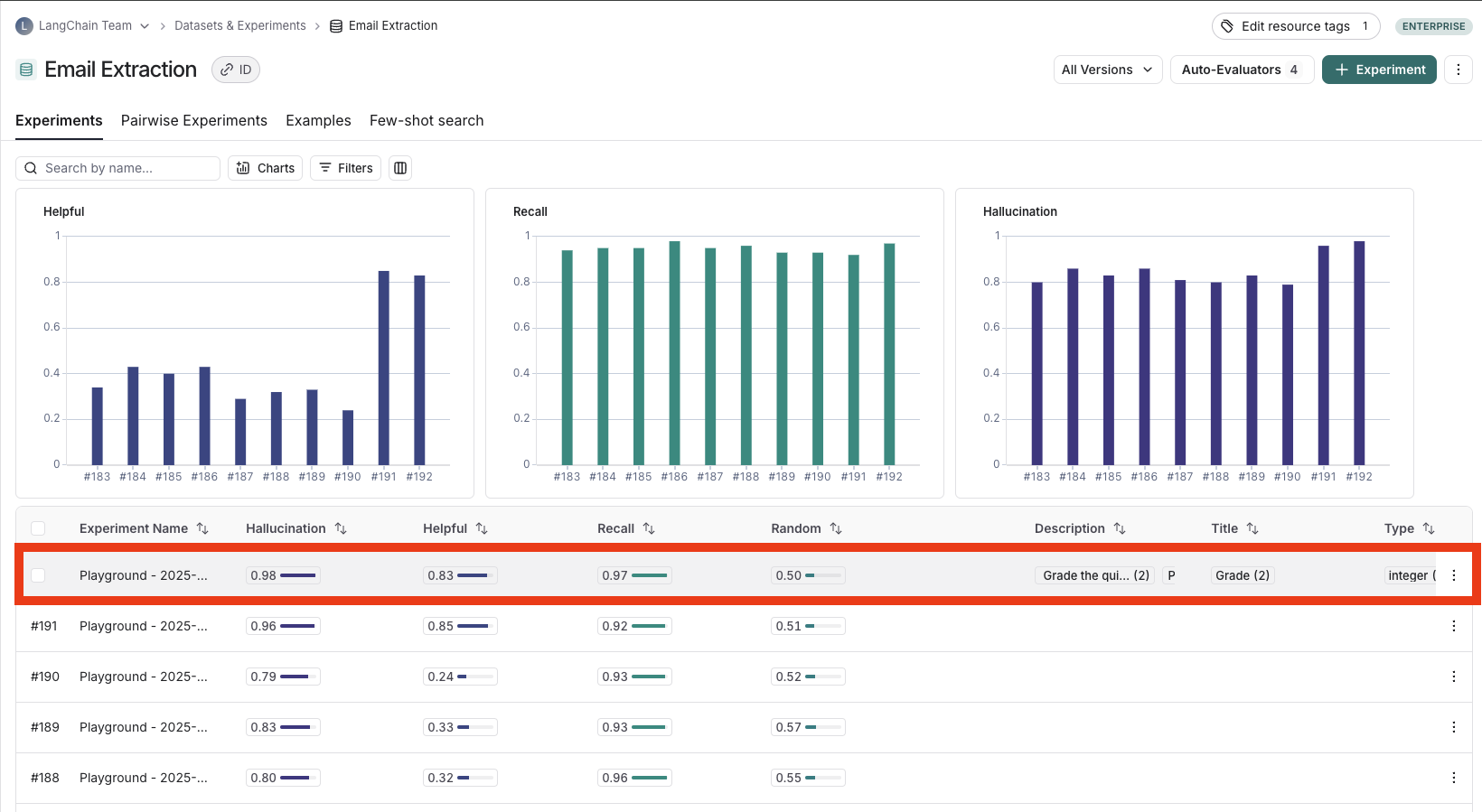

Analyze a single experiment

After running an experiment, you can use LangSmith's experiment view to analyze the results and draw insights about how your experiment performed.

This guide walks you through viewing the results of an experiment and highlights the features available in the experiment view.

Open the experiment view

To open the experiment view, select the relevant dataset from the Datasets & Experiments page, then select the experiment you want to view.

View experiment results

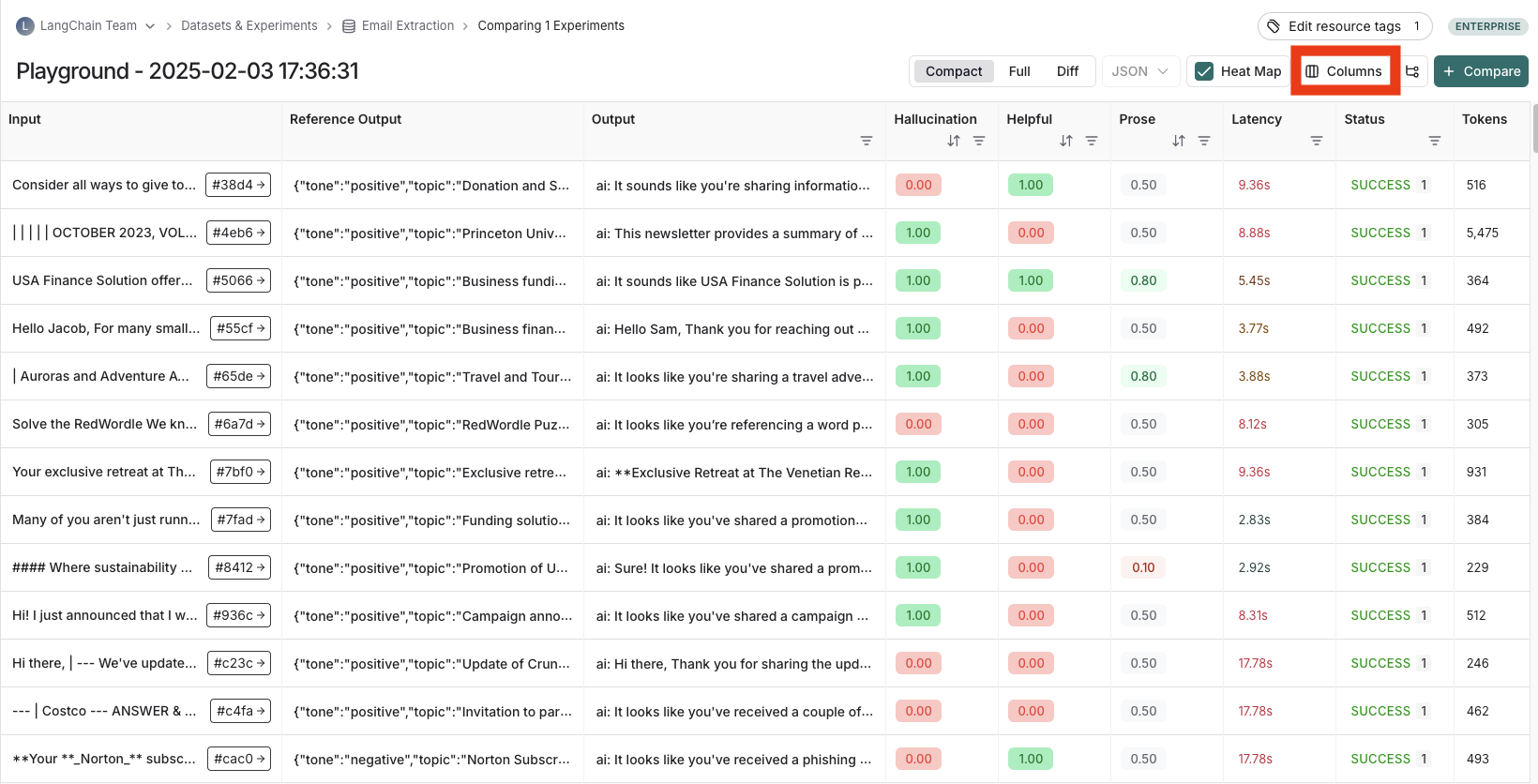

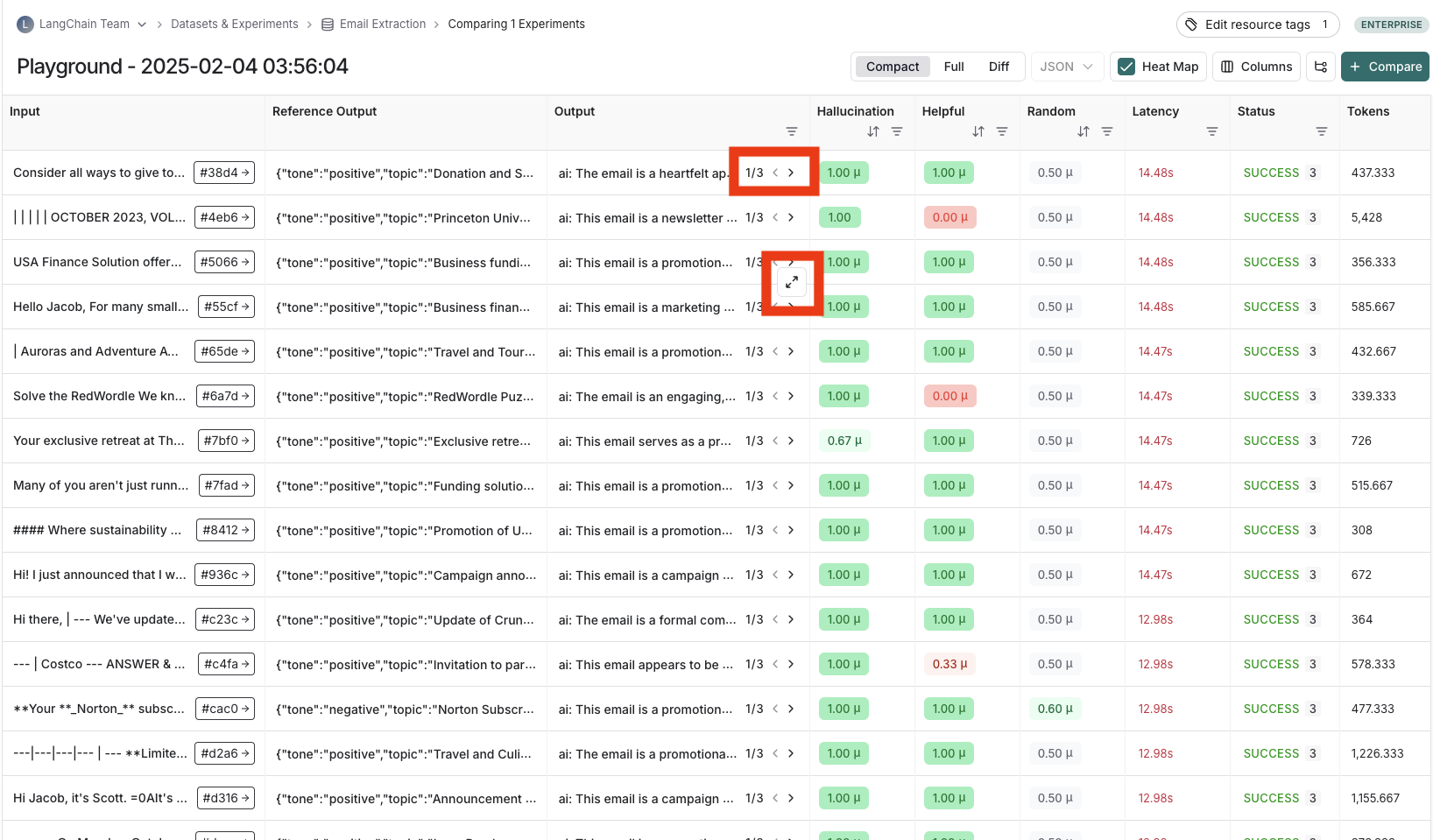

This table displays your experiment results, including the input, output, and reference output for each example in the dataset. Each configured feedback key appears in its own column with its corresponding feedback score.

Out-of-the-box metrics (latency, status, cost, and token count) are also displayed in individual columns.

In the columns dropdown, you can choose which columns to hide and which to show.

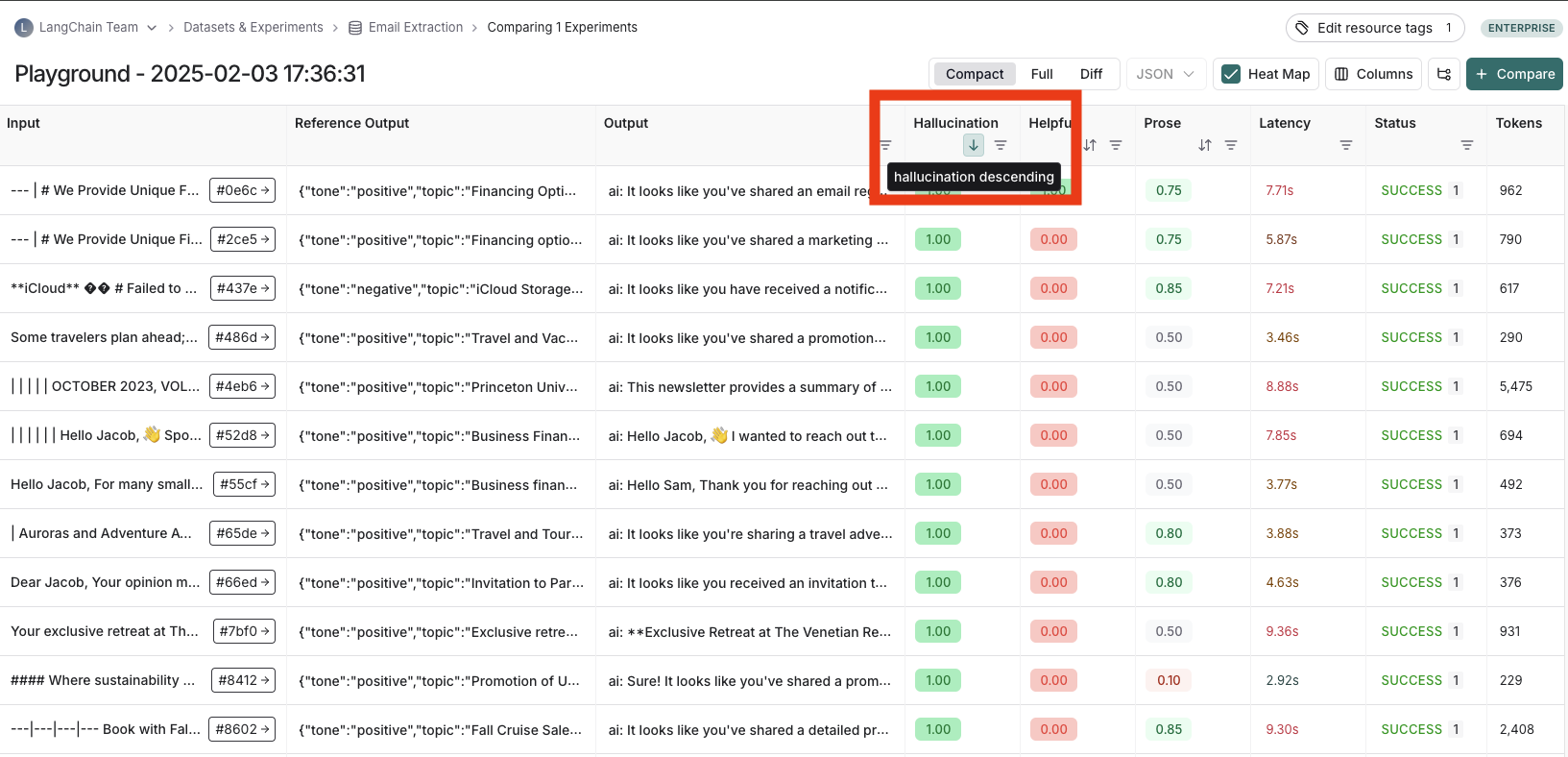

Heatmap view

The experiment view defaults to a heatmap view, where the feedback score for each run is color-coded. Red indicates a lower score, while green indicates a higher score. The heatmap makes it easy to identify patterns, spot outliers, and understand score distributions across your dataset at a glance.
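To make the red-to-green idea concrete, here is a minimal sketch of one way a score could be mapped to a color. It is an illustration only: the function name `score_to_rgb`, the default 0 to 1 score range, and the linear blend are all assumptions, not a description of how LangSmith actually computes its heatmap colors.

```python
def score_to_rgb(score: float, low: float = 0.0, high: float = 1.0) -> tuple[int, int, int]:
    """Blend linearly from red (at `low`) to green (at `high`).

    Hypothetical helper for illustration; the range and the linear
    interpolation are assumptions, not LangSmith's implementation.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    # Normalize the score into [0, 1], clamping values outside the range.
    t = min(max((score - low) / (high - low), 0.0), 1.0)
    # t = 0 gives pure red, t = 1 gives pure green.
    return (round(255 * (1 - t)), round(255 * t), 0)


if __name__ == "__main__":
    for s in (0.0, 0.25, 0.75, 1.0):
        print(s, score_to_rgb(s))
```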

Sort and filter

To sort or filter by feedback score, use the actions in the column headers.

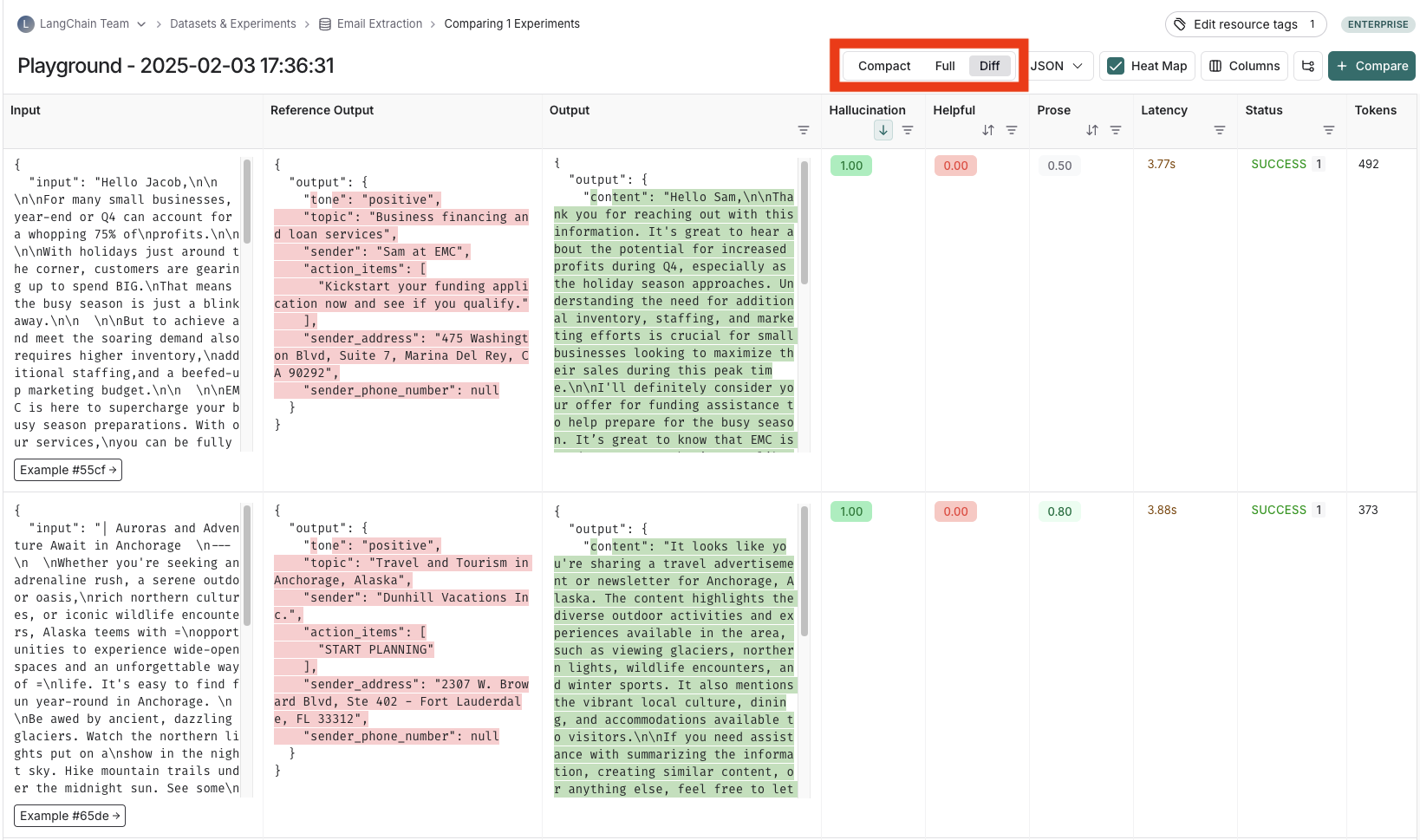

Table views

Depending on which view is most useful for your analysis, you can change the formatting of the table by toggling between a compact view, a full view, and a diff view.

- The Compact view shows each run as a one-line row, for ease of comparing scores at a glance.
- The Full view shows the full output for each run for digging into the details of individual runs.
- The Diff view shows the text difference between the reference output and the output for each run.
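If you want to reproduce a similar comparison outside the UI, the sketch below computes a line-level text difference between a reference output and a run output using Python's standard `difflib` module. The helper name `diff_lines` and the sample strings are hypothetical, and the diff algorithm LangSmith uses for its own view may differ.

```python
import difflib


def diff_lines(reference: str, output: str) -> list[str]:
    """Return a unified diff between a reference output and a run output.

    Hypothetical helper for illustration; lines starting with '-' come
    from the reference and lines starting with '+' come from the output.
    """
    return list(
        difflib.unified_diff(
            reference.splitlines(),
            output.splitlines(),
            fromfile="reference",
            tofile="output",
            lineterm="",  # inputs have no trailing newlines, so add none
        )
    )


if __name__ == "__main__":
    # Made-up example strings, not taken from a real experiment.
    for line in diff_lines("Paris is the capital.", "Paris is the capital of France."):
        print(line)
```

An empty list means the two texts are identical line for line.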

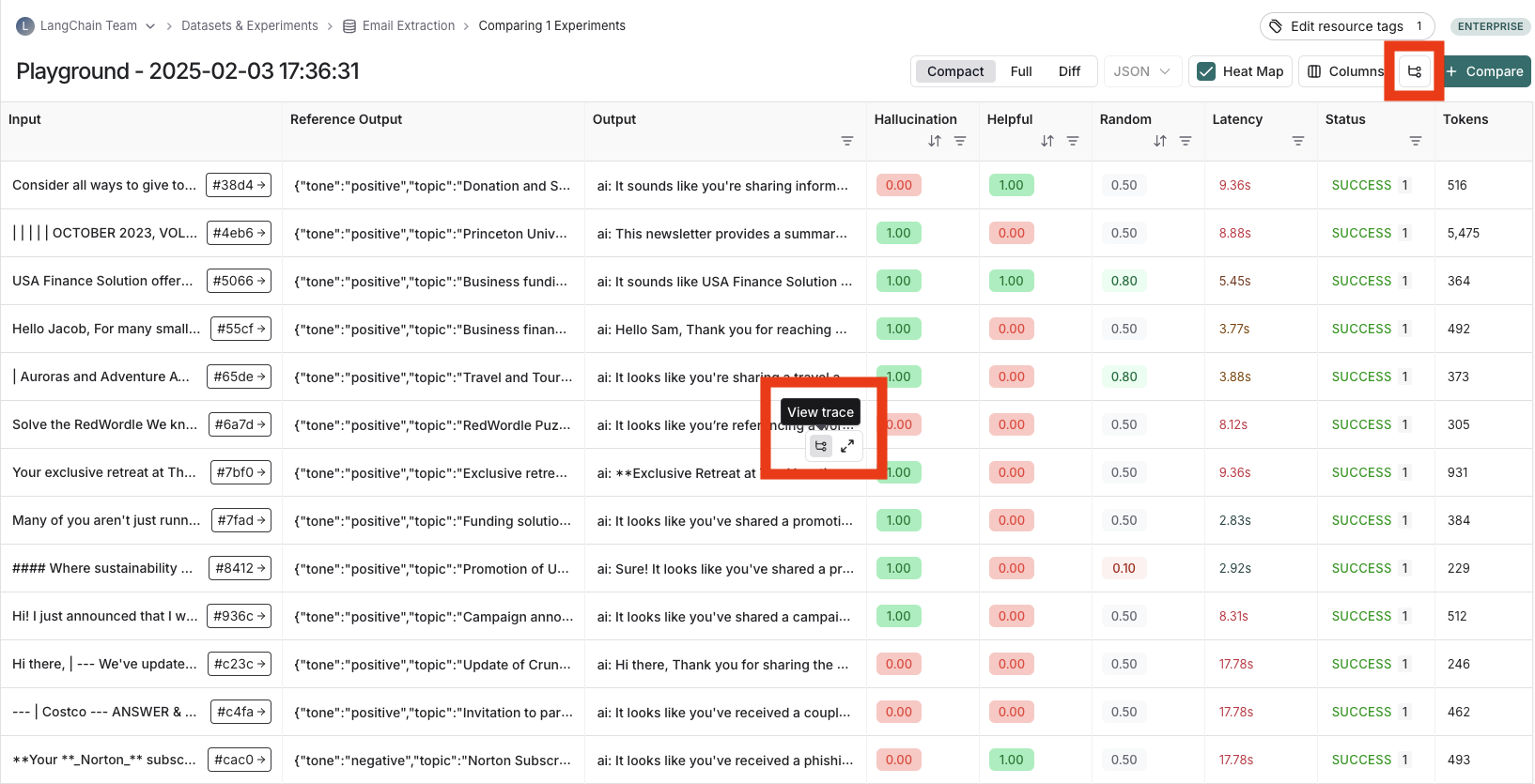

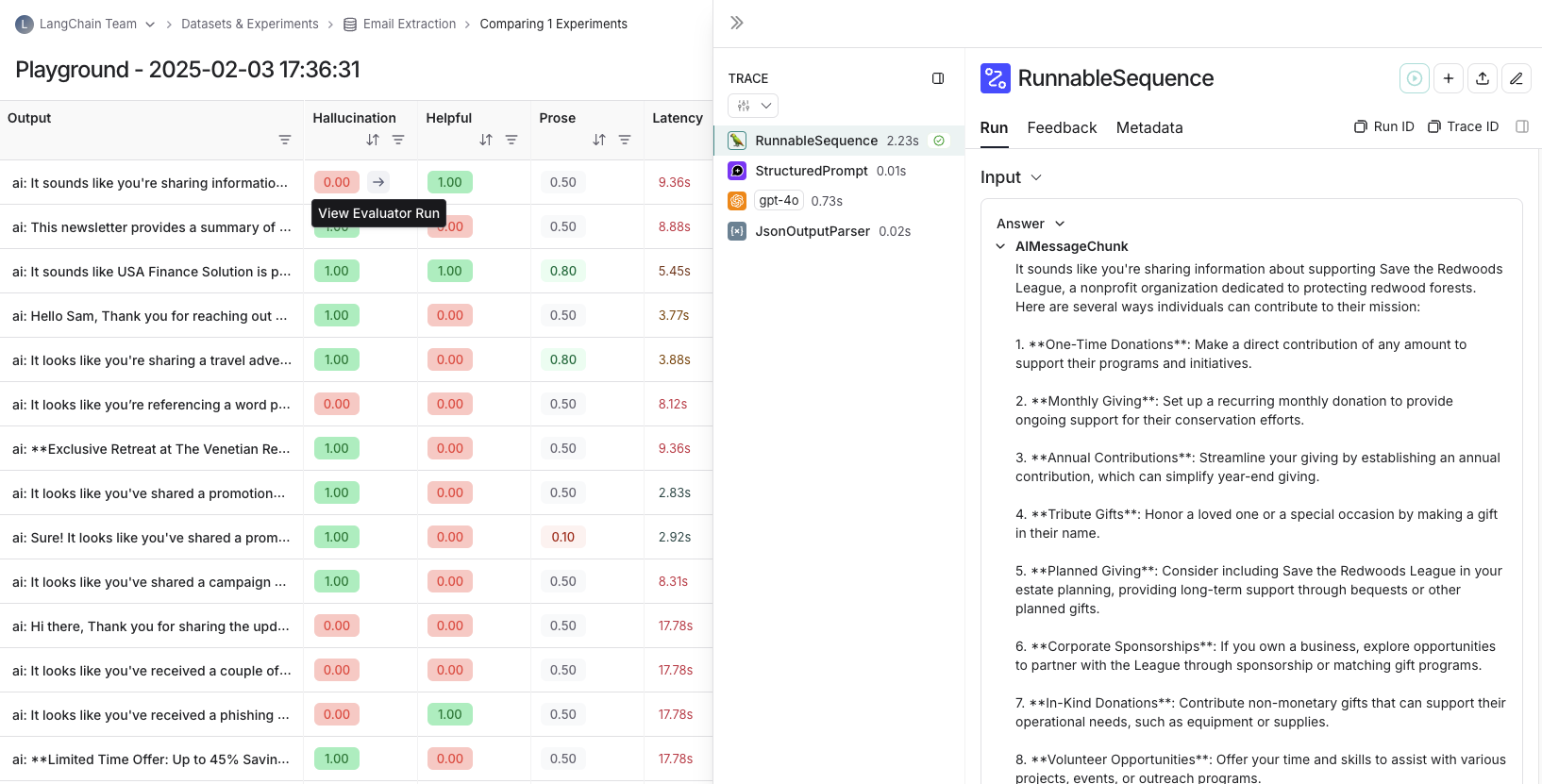

View the traces

Hover over any output cell and click the trace icon to view the trace for that run. The trace opens in the side panel.

To view the entire tracing project, click the "View Project" button in the top right of the header.

View evaluator runs

For evaluator scores, you can view the source run by hovering over the evaluator score cell and clicking the arrow icon. The trace opens in the side panel. If you're running an LLM-as-a-judge evaluator, you can view the prompt used for the evaluator in this run. If your experiment has repetitions, you can click the aggregate average score to find links to all of the individual runs.

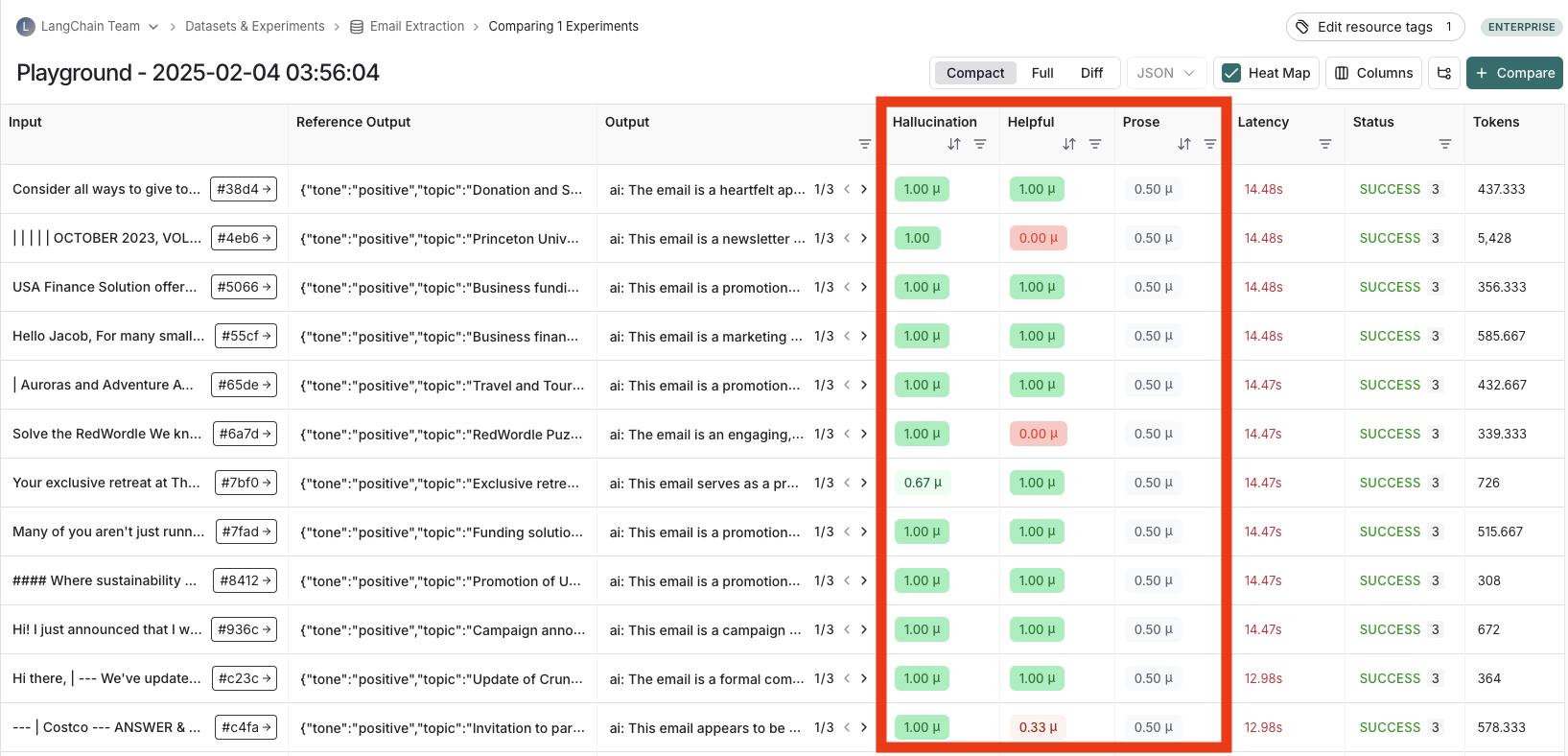

Repetitions

If you've run your experiment with repetitions, arrows appear in the output results column so you can view the outputs in the table. To view each run from the repetition, hover over the output cell and click the expanded view.

When you run an experiment with repetitions, LangSmith displays the average of each feedback score in the table. Click a feedback score to view the scores from the individual runs or the standard deviation across repetitions.
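As a rough illustration of what the aggregate cell summarizes, the sketch below computes the average and standard deviation of one feedback key across repetitions of a single example. The function name `summarize_repetitions` and the sample scores are hypothetical. It uses the population standard deviation (`statistics.pstdev`), which is an assumption: the source does not say whether LangSmith reports the population or the sample form.

```python
from statistics import mean, pstdev


def summarize_repetitions(scores: list[float]) -> dict[str, float]:
    """Aggregate one feedback key across repetitions of a single example.

    Hypothetical helper for illustration. Uses the population standard
    deviation, which is an assumption about the reported statistic.
    """
    if not scores:
        raise ValueError("at least one score is required")
    return {"mean": mean(scores), "std": pstdev(scores)}


if __name__ == "__main__":
    # Made-up scores for four repetitions of the same example.
    print(summarize_repetitions([1.0, 0.0, 1.0, 1.0]))
```

A large standard deviation relative to the mean suggests the individual repetitions are worth inspecting rather than relying on the average alone.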

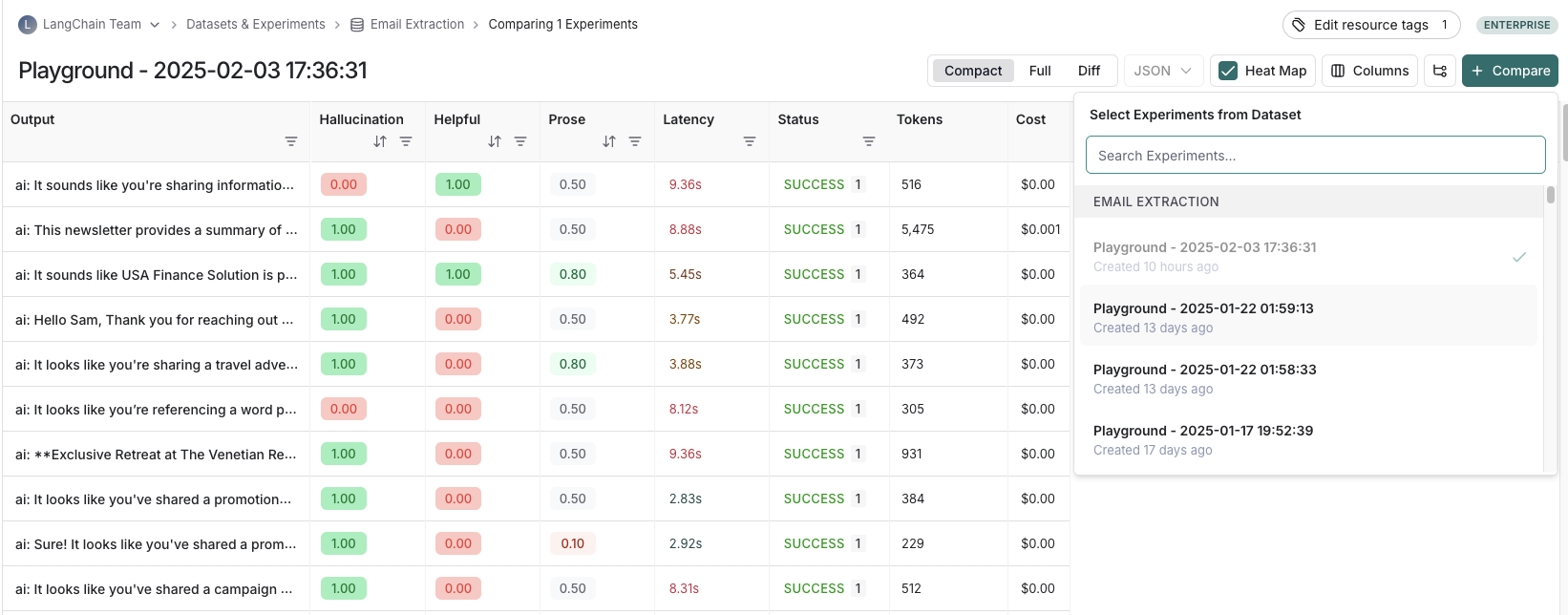

Compare to another experiment

In the top right of the experiment view, you can select another experiment to compare against. This opens a comparison view, where you can see how the two experiments compare. To learn more about the comparison view, see how to compare experiment results.